Pods

Create Namespace and change context

Begin by creating the namespace:

kubectl create namespace namespace01

namespace/namespace01 created

Now point the current context at the new namespace:

kubectl config set-context --current --namespace namespace01

Context "xxxxx@xxxxxx" modified.
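To confirm that the context now points at the new namespace, it can help to read the value back. The following is a minimal sketch, assuming `kubectl` is on your PATH and configured for the lab cluster; the helper name `current_namespace` is hypothetical and only used for illustration.

```shell
# Hypothetical helper: print the namespace configured for the current context.
# --minify restricts the output to the current context only.
current_namespace() {
  kubectl config view --minify -o jsonpath='{..namespace}'
}

# Example (requires access to a cluster):
#   current_namespace
```

If the context was modified as described, the helper should print namespace01.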

Sidecar Container

Create a Pod with a sidecar container that exposes the log file. Save the following manifest as sidecar-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: sidecar-pod
spec:
  volumes:
  - name: logs-sharing
    emptyDir: {}
  containers:
  - name: main-app-container
    image: r.deso.tech/library/alpine
    command: ["/bin/sh"]
    args: ["-c", "while true; do date >> /var/log/app.txt; sleep 5;done"]
    volumeMounts:
    - name: logs-sharing
      mountPath: /var/log
  - name: sidecar-container
    image: r.deso.tech/library/nginx
    ports:
    - containerPort: 80
    volumeMounts:
    - name: logs-sharing
      mountPath: /usr/share/nginx/html

Apply the Pod:

kubectl apply -f sidecar-pod.yaml

pod/sidecar-pod created

kubectl get pod -w

NAME          READY   STATUS              RESTARTS   AGE
sidecar-pod   0/2     ContainerCreating   0          7s
sidecar-pod   2/2     Running             0          28s

To stop the command, hit ++ctrl++ + ++c++.

Connect to the sidecar container:

kubectl exec sidecar-pod -c sidecar-container -it -- /bin/bash

This opens a bash shell inside sidecar-container:

root@sidecar-pod:/#

Now install curl and access the log file from the sidecar container.

apt-get update && apt-get install -y curl

<output_omitted>

curl http://localhost/app.txt

(date every 5 sec.) . . .

Now exit from the container’s shell:

exit
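Because both containers mount the same logs-sharing volume, the file that nginx serves is the very file the main container writes. As a minimal sketch, assuming sidecar-pod is still running and that both images provide a `tail` binary, the file can also be read from either container without installing curl. The helper name `shared_log_tail` is hypothetical.

```shell
# Hypothetical helper: show the last lines of a file inside one container of a Pod.
# Usage: shared_log_tail <pod> <container> <path> [lines]
shared_log_tail() {
  kubectl exec "$1" -c "$2" -- tail -n "${4:-3}" "$3"
}

# Example (run from your workstation while sidecar-pod is running):
#   shared_log_tail sidecar-pod main-app-container /var/log/app.txt
#   shared_log_tail sidecar-pod sidecar-container /usr/share/nginx/html/app.txt
```

Both calls should show the same timestamps, since the two mount paths refer to one emptyDir volume.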

Delete the Pod:

kubectl delete -f sidecar-pod.yaml

Adapter Container

Create a Pod with an adapter container that reformats the output of the main application. Save the following manifest as adapter-pod.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: adapter-pod
spec:
  volumes:
  - name: logs-sharing
    emptyDir: {}
  containers:
  - name: main-app-container
    # This application writes system usage information (`top`) to a status
    # file every five seconds.
    image: r.deso.tech/library/alpine
    command: ["/bin/sh"]
    args: ["-c", "while true; do date > /var/log/top.txt && top -n 1 -b >> /var/log/top.txt; sleep 5;done"]
    volumeMounts:
    - name: logs-sharing
      mountPath: /var/log
  - name: adapter-container
    # Our adapter container will inspect the contents of the app's top file,
    # reformat it, and write the correctly formatted output to the status file
    image: r.deso.tech/library/alpine
    command: ["/bin/sh"]
    args: ["-c", "while true; do (cat /var/log/top.txt | head -1 > /var/log/status.txt) && (cat /var/log/top.txt | head -2 | tail -1 | grep -o -E '\\d+\\w' | head -1 >> /var/log/status.txt) && (cat /var/log/top.txt | head -3 | tail -1 | grep -o -E '\\d+%' | head -1 >> /var/log/status.txt); sleep 5; done"]
    volumeMounts:
    - name: logs-sharing
      mountPath: /var/log

Apply the pod:

kubectl apply -f adapter-pod.yaml

pod/adapter-pod created

kubectl get pod -w

NAME          READY   STATUS              RESTARTS   AGE
adapter-pod   0/2     ContainerCreating   0          6s
adapter-pod   2/2     Running             0          90s

Connect to main-app-container and check the contents of the logs and their formatting:

kubectl exec adapter-pod -c main-app-container -it -- /bin/sh

/ #

Check top output first:

cat /var/log/top.txt

Mem: 2902696K used, 1125800K free, 12952K shrd, 100276K buff, 1820668K cached
CPU:   0% usr   0% sys   0% nic 100% idle   0% io   0% irq   0% sirq
Load average: 0.41 0.30 0.36 1/731 608
  PID  PPID USER     STAT   VSZ %VSZ CPU %CPU COMMAND
  349   341 root     S     1624   0%   3   0% sh
    1     0 root     S     1616   0%   3   0% /bin/sh -c while true; do date > /var/log/top.txt && top -n 1 -b >> /var/log/top.txt; sleep 5;done
  608     1 root     R     1556   0%   1   0% top -n 1 -b

Then check the reformatted status file written by the adapter:

cat /var/log/status.txt

2902728K 4%

Now exit from the container’s shell:

exit
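The adapter's pipeline can be tried on any machine with a POSIX shell, which makes it easier to see what each stage extracts. The sketch below is illustrative only: the function name `reformat_status` and the date line in the sample input are made up, and the regular expressions use `[0-9]` and `[A-Za-z]` in place of the manifest's `\d` and `\w`, because not every `grep -E` accepts those shorthands.

```shell
# Hypothetical local version of the adapter logic.
# Usage: reformat_status <top_file>  -> prints the three status lines
reformat_status() {
  top_file=$1
  # Line 1: the timestamp written by `date`.
  head -n 1 "$top_file"
  # Line 2 (Mem): first number followed by a unit letter, i.e. memory used.
  head -n 2 "$top_file" | tail -n 1 | grep -o -E '[0-9]+[A-Za-z]' | head -n 1
  # Line 3 (CPU): first percentage, i.e. user CPU time.
  head -n 3 "$top_file" | tail -n 1 | grep -o -E '[0-9]+%' | head -n 1
}

# Sample input: the first line is a placeholder for the output of `date`;
# the Mem and CPU lines are taken from the top output shown in this lab.
sample=$(mktemp)
cat > "$sample" <<'EOF'
Mon Dec  7 06:00:00 UTC 2020
Mem: 2902696K used, 1125800K free, 12952K shrd, 100276K buff, 1820668K cached
CPU:   0% usr   0% sys   0% nic 100% idle   0% io   0% irq   0% sirq
EOF

reformat_status "$sample"
rm -f "$sample"
```

For this sample the function prints the placeholder date line, then 2902696K, then 0%.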

Delete the Pod:

kubectl delete -f adapter-pod.yaml

Pod — Exit 0 - restartPolicy: Always

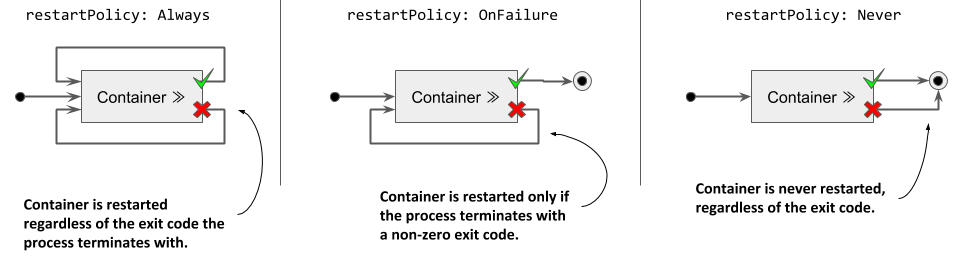

A pod has a restartPolicy field with possible values Always, OnFailure, and Never.

The default value is Always.

The restart policy applies to all of the containers in the pod.

Failed containers are restarted on the same node with a delay that grows exponentially up to 5 minutes.
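The three policies can be summarised as a small decision function. This is only a sketch of the rule described above, not kubelet code; the function name `should_restart` is hypothetical.

```shell
# Hypothetical helper mirroring the restartPolicy semantics.
# Usage: should_restart <policy> <exit_code>  -> prints "yes" or "no"
should_restart() {
  policy=$1
  exit_code=$2
  case "$policy" in
    Always)    echo yes ;;   # restart regardless of the exit code
    OnFailure) if [ "$exit_code" -ne 0 ]; then echo yes; else echo no; fi ;;
    Never)     echo no ;;    # never restart
    *)         echo "unknown policy: $policy" >&2; return 1 ;;
  esac
}

# Print the decision for every policy with exit codes 0 and 1.
for policy in Always OnFailure Never; do
  for code in 0 1; do
    printf '%-10s exit=%s restart=%s\n' "$policy" "$code" "$(should_restart "$policy" "$code")"
  done
done
```

The rest of this lab exercises these combinations with real Pods.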

Now create the configuration file for your Pod and save it as mypod-1.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: mypod-1
  labels:
    app: myapp
spec:
  containers:
  - name: mypod-1-container
    image: r.deso.tech/library/alpine
    command: ['sh', '-c', 'echo The Pod is running && sleep 10']

To better understand the concept, you should have two terminal consoles open.

Open another terminal window and run:

kubectl get pod -w

On the first terminal, create the pod

kubectl apply -f mypod-1.yaml

pod/mypod-1 created

Let’s investigate the Pod status over time:

kubectl get pod -w

NAME      READY   STATUS              RESTARTS   AGE
mypod-1   0/1     Pending             0          0s
mypod-1   0/1     ContainerCreating   0          2s
mypod-1   1/1     Running             0          3s
mypod-1   0/1     Completed           0          13s
mypod-1   1/1     Running             1          16s
mypod-1   0/1     Completed           1          26s
mypod-1   0/1     CrashLoopBackOff    1          41s
mypod-1   1/1     Running             2          42s
mypod-1   0/1     Completed           2          52s
mypod-1   1/1     Running             3          78s
mypod-1   0/1     Completed           3          88s
mypod-1   0/1     CrashLoopBackOff    3          102s
mypod-1   1/1     Running             4          2m19s
mypod-1   0/1     Completed           4          2m29s
mypod-1   0/1     CrashLoopBackOff    4          2m42s

The Pod stays in Running status for 10 seconds. Then the status turns to Completed and the container is immediately started again.

Note the lines that show READY 1/1. This means that 1 container out of 1 in the Pod is ready: running and able to be attached to, exec'ed into, and so on.

The other lines show READY 0/1: the Pod is no longer ready for interactive use. The container has completed, and after repeated restarts the Pod ends up in CrashLoopBackOff.

CrashLoopBackOff means that a container keeps starting and exiting, and the kubelet is waiting before it restarts the container again. Describe the Pod to see more information:

kubectl describe pod mypod-1

Name:         mypod-1
Namespace:    namespace01
Priority:     0
Node:         k8s-worker01/10.10.99.214
Start Time:   Mon, 07 Dec 2020 06:37:10 +0100
Labels:       app=myapp
Annotations:  cni.projectcalico.org/podIP: 192.168.79.70/32
              cni.projectcalico.org/podIPs: 192.168.79.70/32
Status:       Running
IP:           192.168.79.70
IPs:
  IP:  192.168.79.70
Containers:
  mypod-1-container:
    Container ID:  docker://d4a8f4a21e9aaaa6dd0babc52da3bee2eb17ccade1a95f957fafb196654aa2a5
    Image:         r.deso.tech/library/alpine
    Image ID:      docker-pullable://alpine@sha256:c0e9560cda118f9ec63ddefb4a173a2b2a0347082d7dff7dc14272e7841a5b5a
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      echo The Pod is running && sleep 10
    State:          Waiting
      Reason:       CrashLoopBackOff
    Last State:     Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Mon, 07 Dec 2020 06:44:05 +0100
      Finished:     Mon, 07 Dec 2020 06:44:15 +0100
    Ready:          False
    Restart Count:  6

The key fields:

- Status: Running … the Pod phase is still Running, because the kubelet keeps restarting the container.

- State: Waiting … the current, lower-level state of the container.

- Reason: CrashLoopBackOff … the container exited (here it COMPLETED), no container is running inside the Pod, and the kubelet is backing off before the next restart.

- Exit Code: 0 … the last run of the container exited successfully.

- Started: 06:44:05 and Finished: 06:44:15 … the container slept for 10 seconds.

- Ready: False … the container is not ready because it has terminated.

- Restart Count: 6 … every time the container completes, the kubelet restarts it.

A PodSpec has a restartPolicy field with possible values Always, OnFailure, and Never, which applies to all containers in the Pod. The default value is Always. The restartPolicy only refers to restarts of the containers by the kubelet on the same node (so the restart count will reset if the Pod is rescheduled to a different node). Containers that exit and are restarted by the kubelet are restarted with an exponential back-off delay (10s, 20s, 40s …) capped at five minutes; the delay is reset after ten minutes of successful execution.
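The doubling schedule can be written out as a few lines of shell arithmetic. This is a rough sketch of the rule as stated (start at 10 seconds, double each time, cap at 300 seconds), not the kubelet's actual implementation, so the gaps you observe with kubectl get pod -w will not match it exactly. The function name `backoff_delay` is hypothetical.

```shell
# Hypothetical helper: nominal back-off before the n-th restart, in seconds.
# Usage: backoff_delay <restart_number>   (1 = first restart)
backoff_delay() {
  n=$1
  delay=10
  i=1
  while [ "$i" -lt "$n" ]; do
    delay=$((delay * 2))
    # Cap the delay at five minutes.
    if [ "$delay" -ge 300 ]; then
      delay=300
      break
    fi
    i=$((i + 1))
  done
  echo "$delay"
}

for n in 1 2 3 4 5 6 7; do
  echo "restart $n: back-off $(backoff_delay "$n")s"
done
```

The loop prints 10, 20, 40, 80, 160, 300 and 300 seconds for restarts 1 through 7.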

Now delete the pod:

kubectl delete pod mypod-1 --force --grace-period=0

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "mypod-1" force deleted

kubectl get pod

No resources found in namespace01 namespace.

Pod — Exit 0 - restartPolicy: Never

Now create a new file named mypod-2.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: mypod-2
  labels:
    app: myapp
spec:
  containers:
  - name: mypod-2-container
    image: r.deso.tech/library/alpine
    imagePullPolicy: IfNotPresent
    command: ['sh', '-c', 'echo The Pod is running && sleep 10']
  restartPolicy: Never

Now open a second terminal and run:

kubectl get pod -w

On the first terminal, create the pod.

kubectl apply -f mypod-2.yaml

pod/mypod-2 created

Let’s investigate the Pod status:

kubectl get pod -w

NAME      READY   STATUS              RESTARTS   AGE
mypod-2   0/1     Pending             0          0s
mypod-2   0/1     Pending             0          0s
mypod-2   0/1     ContainerCreating   0          0s
mypod-2   0/1     ContainerCreating   0          2s
mypod-2   1/1     Running             0          3s
mypod-2   0/1     Completed           0          14s

The Pod stays in Running status for 10 seconds. Then the status turns to Completed and the container is not started again.

Describe the Pod named mypod-2; the output below is trimmed to the important status fields:

kubectl describe pod mypod-2

Name:         mypod-2
Namespace:    namespace01
Priority:     0
Node:         k8s-worker01/10.10.99.214
Start Time:   Mon, 07 Dec 2020 07:39:31 +0100
Labels:       app=myapp
Annotations:  cni.projectcalico.org/podIP: 192.168.79.91/32
              cni.projectcalico.org/podIPs: 192.168.79.91/32
Status:       Succeeded
IP:           192.168.79.91
IPs:
  IP:  192.168.79.91
Containers:
  mypod-2-container:
    Container ID:  docker://b9b79b300ece8bf9ea6654268eedb9a35f55a4f5c5c86ecaf670b3f48f827785
    Image:         r.deso.tech/library/alpine
    Image ID:      docker-pullable://alpine@sha256:c0e9560cda118f9ec63ddefb4a173a2b2a0347082d7dff7dc14272e7841a5b5a
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      echo The Pod is running && sleep 10
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Mon, 07 Dec 2020 07:39:33 +0100
      Finished:     Mon, 07 Dec 2020 07:39:43 +0100
    Ready:          False
    Restart Count:  0

The key fields:

- Status: Succeeded … the Pod completed its process successfully.

- State: Terminated … the container running inside the Pod finished its process and terminated.

- Reason: Completed … the container terminated because it COMPLETED.

- Exit Code: 0 … the container exited successfully.

- Started: 07:39:33 and Finished: 07:39:43 … the container slept for 10 seconds.

- Ready: False … the container is no longer ready because it has terminated.

- Restart Count: 0 … with restartPolicy set to Never, the container is not restarted.
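When only these few fields matter, they can be read directly with a JSONPath query instead of scanning the full describe output. The following is a minimal sketch, assuming a single-container Pod (index 0 in containerStatuses) and a configured `kubectl`; the helper name `pod_summary` is hypothetical.

```shell
# Hypothetical helper: print the Pod phase, restart count and last exit code.
# Usage: pod_summary <pod-name>
pod_summary() {
  pod=$1
  phase=$(kubectl get pod "$pod" -o jsonpath='{.status.phase}')
  restarts=$(kubectl get pod "$pod" -o jsonpath='{.status.containerStatuses[0].restartCount}')
  # exitCode is only present while the container is in the terminated state.
  exit_code=$(kubectl get pod "$pod" -o jsonpath='{.status.containerStatuses[0].state.terminated.exitCode}')
  echo "phase=$phase restarts=$restarts exitCode=${exit_code:-n/a}"
}

# Example (requires the Pod to exist in the current namespace):
#   pod_summary mypod-2
```

For a Pod in the state described above, the summary should read phase=Succeeded restarts=0 exitCode=0.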

Now delete the pod:

kubectl delete pod mypod-2 --force --grace-period=0

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "mypod-2" force deleted

kubectl get pod

No resources found in namespace01 namespace.

Pod — Exit 1 - restartPolicy: Never

Let’s create a new Pod. Save the following as mypod-3.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: mypod-3
  labels:
    app: myapp
spec:
  containers:
  - name: mypod-3-container
    image: r.deso.tech/library/alpine
    imagePullPolicy: IfNotPresent
    command: ['sh', '-c', 'echo The Pod was running && exit 1']
  restartPolicy: Never

Apply the configuration

kubectl apply -f mypod-3.yaml

pod/mypod-3 created

List the pod

kubectl get pod

NAME      READY   STATUS   RESTARTS   AGE
mypod-3   0/1     Error    0          4s

As you can see, the Pod is in the Error status. Describe it for more detail:

kubectl describe pod mypod-3

Name:         mypod-3
Namespace:    namespace01
Priority:     0
Node:         k8s-worker01/10.10.99.214
Start Time:   Mon, 07 Dec 2020 08:00:37 +0100
Labels:       app=myapp
Annotations:  cni.projectcalico.org/podIP: 192.168.79.72/32
              cni.projectcalico.org/podIPs: 192.168.79.72/32
Status:       Failed
IP:           192.168.79.72
IPs:
  IP:  192.168.79.72
Containers:
  mypod-3-container:
    Container ID:  docker://c27bb901bf0e007132dae4b598c2624ee2b97c75e5e3df7a63bf0776f15a571f
    Image:         r.deso.tech/library/alpine
    Image ID:      docker-pullable://alpine@sha256:c0e9560cda118f9ec63ddefb4a173a2b2a0347082d7dff7dc14272e7841a5b5a
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      echo The Pod was running && exit 1
    State:          Terminated
      Reason:       Error
      Exit Code:    1
      Started:      Mon, 07 Dec 2020 08:00:40 +0100
      Finished:     Mon, 07 Dec 2020 08:00:40 +0100
    Ready:          False
    Restart Count:  0

The Pod shows Status: Failed and State: Terminated, with Reason: Error caused by Exit Code: 1. The container started at 08:00:40 and finished at 08:00:40, a runtime of zero seconds: it exited with an error immediately, and with restartPolicy set to Never it was not restarted.

Now delete the pod.

kubectl delete pod mypod-3 --force --grace-period=0

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "mypod-3" force deleted

kubectl get pod

No resources found in namespace01 namespace.

Pod — Exit 0 - restartPolicy: OnFailure

Now create a new file named mypod-4.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: mypod-4
  labels:
    app: myapp
spec:
  containers:
  - name: mypod-4-container
    image: r.deso.tech/library/alpine
    imagePullPolicy: IfNotPresent
    command: ['sh', '-c', 'echo The Pod is running && sleep 3']
  restartPolicy: OnFailure

This is almost the same file, but the sleep time is changed to 3 seconds and the restartPolicy to OnFailure.

Open a second terminal and run:

kubectl get pod -w

On the first terminal, create the pod

kubectl apply -f mypod-4.yaml

pod/mypod-4 created

Let’s investigate the Pod status:

kubectl get pod -w

NAME      READY   STATUS              RESTARTS   AGE
mypod-4   0/1     Pending             0          0s
mypod-4   0/1     Pending             0          0s
mypod-4   0/1     ContainerCreating   0          0s
mypod-4   0/1     ContainerCreating   0          2s
mypod-4   1/1     Running             0          3s
mypod-4   0/1     Completed           0          5s

Run describe to get the Pod status.

kubectl describe pod mypod-4

Name:         mypod-4
Namespace:    namespace01
Priority:     0
Node:         k8s-worker01/10.10.99.214
Start Time:   Mon, 07 Dec 2020 08:43:32 +0100
Labels:       app=myapp
Annotations:  cni.projectcalico.org/podIP: 192.168.79.111/32
              cni.projectcalico.org/podIPs: 192.168.79.111/32
Status:       Succeeded
IP:           192.168.79.111
IPs:
  IP:  192.168.79.111
Containers:
  mypod-4-container:
    Container ID:  docker://fabc4f496fbec5cdd6c5e00a6baa8f19f526fddb708d04188ef99f95e4c68542
    Image:         r.deso.tech/library/alpine
    Image ID:      docker-pullable://alpine@sha256:c0e9560cda118f9ec63ddefb4a173a2b2a0347082d7dff7dc14272e7841a5b5a
    Port:          <none>
    Host Port:     <none>
    Command:
      sh
      -c
      echo The Pod is running && sleep 3
    State:          Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Mon, 07 Dec 2020 08:43:35 +0100
      Finished:     Mon, 07 Dec 2020 08:43:38 +0100
    Ready:          False
    Restart Count:  0

As you can see, the container exited with code 0 and completed its process without any failure, so the kubelet did not restart it.

Now delete the pod.

kubectl delete pod mypod-4 --force --grace-period=0

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "mypod-4" force deleted

Pod — Exit 1 - restartPolicy: OnFailure

Create a new file named mypod-5.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: mypod-5
  labels:
    app: myapp
spec:
  containers:
  - name: mypod-5-container
    image: r.deso.tech/library/alpine
    imagePullPolicy: IfNotPresent
    command: ['sh', '-c', 'echo The Pod was running && exit 1']
  restartPolicy: OnFailure

This is almost the same file, but the container now exits with status 1.

Open a second terminal and run:

kubectl get pod -w

On the first terminal, create the pod

kubectl apply -f mypod-5.yaml

pod/mypod-5 created

Let’s investigate the Pod status on the second terminal:

NAME      READY   STATUS              RESTARTS   AGE
mypod-5   0/1     Pending             0          0s
mypod-5   0/1     Pending             0          0s
mypod-5   0/1     ContainerCreating   0          0s
mypod-5   0/1     ContainerCreating   0          2s
mypod-5   0/1     Error               0          3s
mypod-5   0/1     Error               1          5s
mypod-5   0/1     CrashLoopBackOff    1          6s
mypod-5   0/1     Error               2          19s
mypod-5   0/1     CrashLoopBackOff    2          29s
mypod-5   0/1     Error               3          42s
mypod-5   0/1     CrashLoopBackOff    3          54s
mypod-5   0/1     Error               4          96s
mypod-5   0/1     CrashLoopBackOff    4          111s

Because the container fails with a non-zero exit code, the kubelet keeps restarting it, with a growing delay between attempts.

Now delete the pod.

kubectl delete pod mypod-5 --force --grace-period=0

warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
pod "mypod-5" force deleted

Clean Up

Let’s clean up the lab now. Switch the context back to the default namespace, then delete the lab namespace:

kubectl config set-context --current --namespace default

Context "xxxxx@xxxxxx" modified.

kubectl delete namespace namespace01

namespace/namespace01 deleted